Research Directions

Multimodal Interaction

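Our multimodal interaction research integrates multiple input channels, such as gesture, speech, and posture, to build more natural and efficient human-computer interaction. The work covers two directions: designing scripted multimodal interaction schemes and understanding natural multimodal behavior.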

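A scripted multimodal interaction scheme is an interaction method defined in advance by the designer, for example combining a specific gesture with a voice command to select a target and execute a task. In this kind of interaction, users follow established rules and convey their intent to the system through the prescribed multimodal inputs. Our research in this direction examines users' habits and preferences in using multiple modalities across scenarios and tasks, and analyzes users' ability to interpret the semantics of different modalities along with the corresponding encoding space. The goal is to design scripted multimodal interaction schemes for a variety of interactive functions that are interpretable, learnable, and generalizable.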
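As a rough illustration of what "scripted" means here, the sketch below treats a scheme as a fixed lookup table from a (gesture, voice command) pair to a system action. The gesture names, commands, and actions are hypothetical placeholders chosen for this example, not schemes taken from our studies.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical scheme: each (gesture, spoken command) pair maps to one action.
# A real scheme would come from user studies, not from this table.
SCHEME = {
    ("point", "select"): "select_target",
    ("pinch", "move"): "move_target",
    ("open_palm", "cancel"): "cancel_task",
}


@dataclass(frozen=True)
class MultimodalInput:
    gesture: str  # label produced by a gesture recognizer (assumed)
    speech: str   # keyword produced by a speech recognizer (assumed)


def interpret(event: MultimodalInput) -> Optional[str]:
    """Return the scripted action, or None if the input is outside the scheme."""
    key = (event.gesture.strip().lower(), event.speech.strip().lower())
    return SCHEME.get(key)
```

An input that does not match any predefined pair returns `None`, which reflects the main constraint of scripted schemes: users must learn and follow the rules the designer has set.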

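Natural multimodal behavior understanding, by contrast, lets users interact with the system through multimodal behavior in a freer way. Compared with scripted schemes, this approach matches user intuition more closely, makes interaction flow naturally, and imposes almost no additional learning cost. It also places higher demands on algorithms for multimodal behavior recognition and intent inference. We therefore start from collecting and analyzing users' natural multimodal behavior and combine traditional machine learning methods with large language models to build intelligent interaction models that understand natural multimodal expression, supporting more robust and generalizable human-computer interaction systems.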

Related Publications

Lesong Jia, Xiaozhou Zhou, Chengqi Xue. Non-trajectory-based gesture recognition in human-computer interaction based on hand skeleton data. Multimedia Tools and Applications, 2022.

Lesong Jia, Xiaozhou Zhou, Hao Qin, Ruidong Bai, Liuqing Wang, Chengqi Xue. Research on discrete semantics in continuous hand joint movement based on perception and expression. Sensors, 2021.

Xiaozhou Zhou, Lesong Jia, Ruidong Bai, Chengqi Xue. DigCode—a generic mid-air gesture coding method on human-computer interaction. International Journal of Human-Computer Studies, 2024.

Xiaoxi Du, Lesong Jia, Weiye Xiao, Xiaozhou Zhou, Mu Tong, Jinchun Wu, Chengqi Xue. Speech+ Posture: A Method for Interaction with Multiple and Large Interactive Displays. Intelligent Human Systems Integration (IHSI 2022), 2022.

Ming Hao, Zhou Xiaozhou, Xue Chengqi, Xiao Weiye, Jia Lesong. The Influence of the Threshold of the Size of the Graphic Element on the General Dynamic Gesture Behavior. International Conference on Human Systems Engineering and Design, 2019.

Robot Interaction

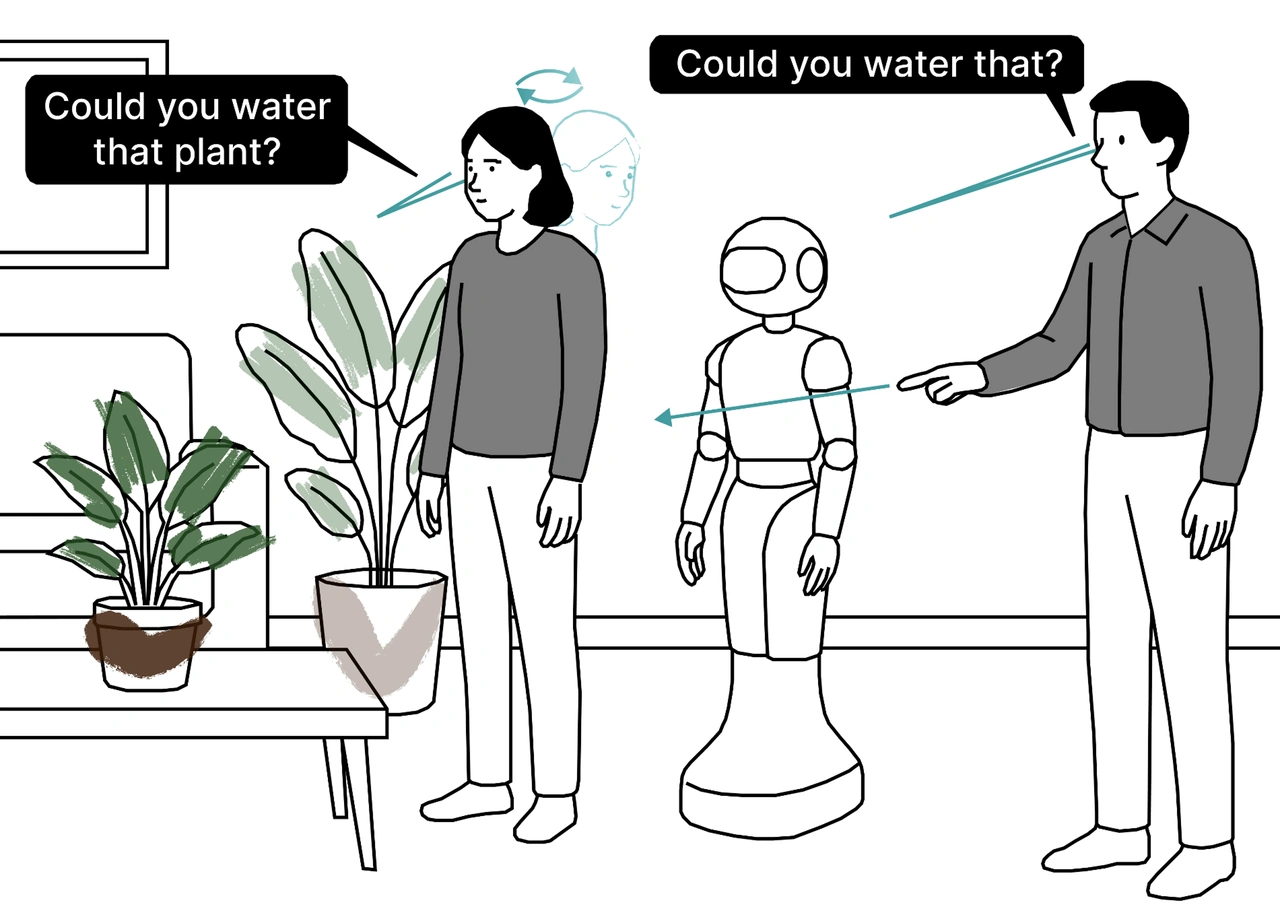

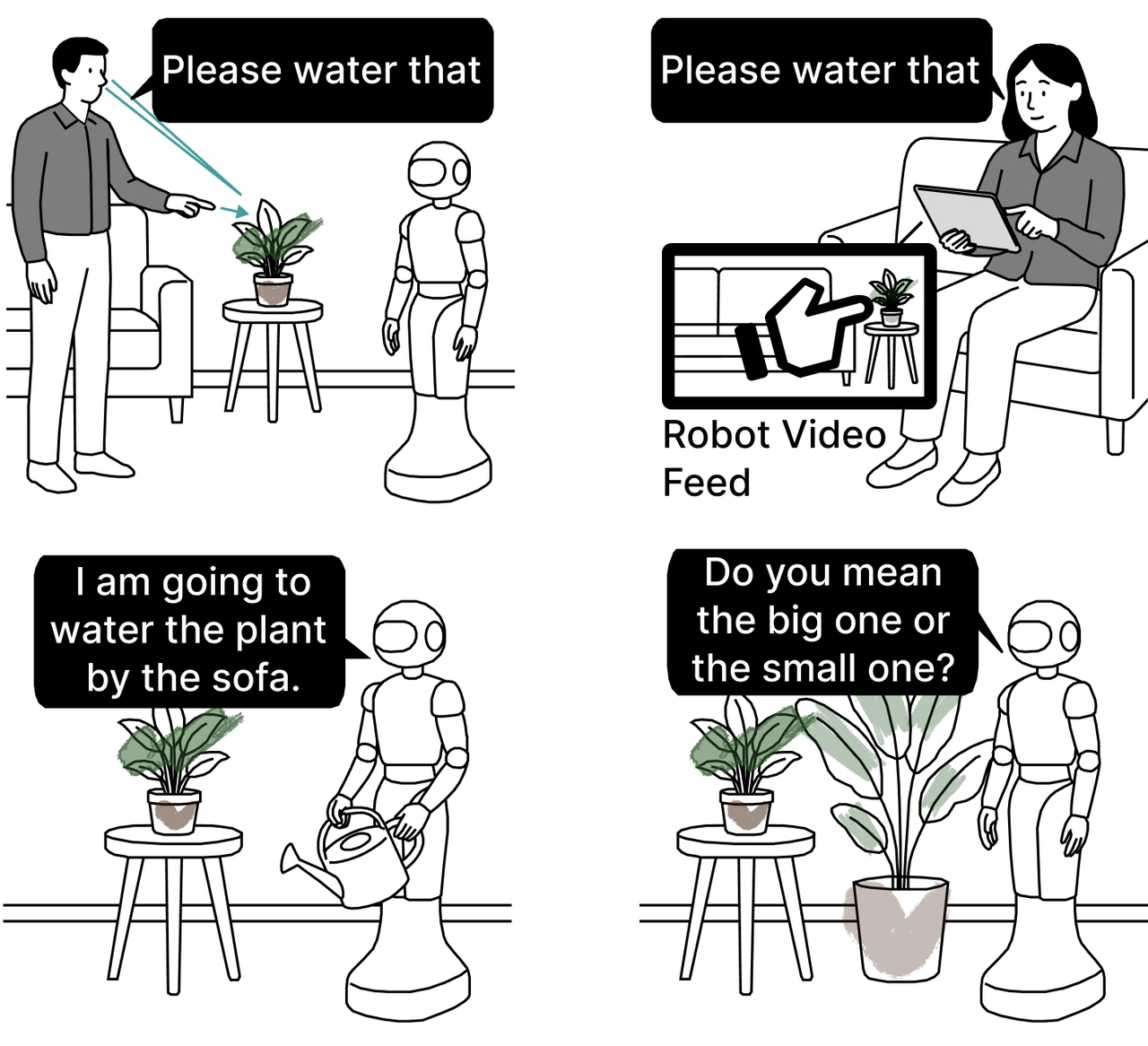

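Our robot interaction research focuses on user-centered communication mechanisms. It aims to improve the usability, understandability, and trustworthiness of robotic systems through well-designed interaction and feedback, so that they better fit user needs and habits.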

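Specifically, we examine users' core expectations of functional capabilities and interface information presentation when they collaborate with robots on tasks, and we summarize the main difficulties and decision burdens users encounter during interaction. Through user studies, we analyze design considerations for task planning, execution feedback, operational transparency, and information presentation in robotic systems. We further study how preferences differ across feedback modalities (such as visual and auditory) in robot control and interaction, and how these modalities affect interaction efficiency, workload, and user experience. This work provides empirical evidence and design recommendations for more natural and efficient human-robot collaboration interfaces.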

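In addition, we treat emotion as an important dimension of human-robot interaction quality and systematically study how robots' emotional expression and emotion regulation strategies affect users' feelings and behavior. We focus on how different emotion regulation approaches (such as empathic versus non-empathic regulation) influence users' emotional change, trust formation, willingness to accept advice, and overall evaluation of the interaction in different contexts. Using experimental paradigms such as video and simulation tasks, we build an analytical framework that provides theoretical and experimental support for robot interaction strategies with greater social intelligence and emotional adaptability.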

Related Publications

Lesong Jia, Jackie Ayoub, Danyang Tian, Miao Song, Ehsan Moradi Pari, Na Du. Robots with Heart: How Emotion Regulation Shapes Human-Robot Interaction. International Journal of Social Robotics, 2026.

Lesong Jia, Breelyn Kane Styler, Na Du. More Than Automation: User Insights into the Functionality and Interface of Wheelchair-Mounted Robotic Arms. 2025 20th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2025.

Lesong Jia, Jackie Ayoub, Danyang Tian, Miao Song, Ehsan Moradi Pari, Na Du. Emotion Regulation in Human-Robot Interaction: Insights from Video and Game Simulations. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2025.

Breelyn Kane Styler, Lesong Jia, Henny Admoni, Reid Simmons, Rory Cooper, Na Du, Dan Ding. Evaluating Feedback Modality Preferences of Power Wheelchair Users During Manual Robotic Arm Control. 2025 International Conference On Rehabilitation Robotics (ICORR), 2025.

Situation Awareness

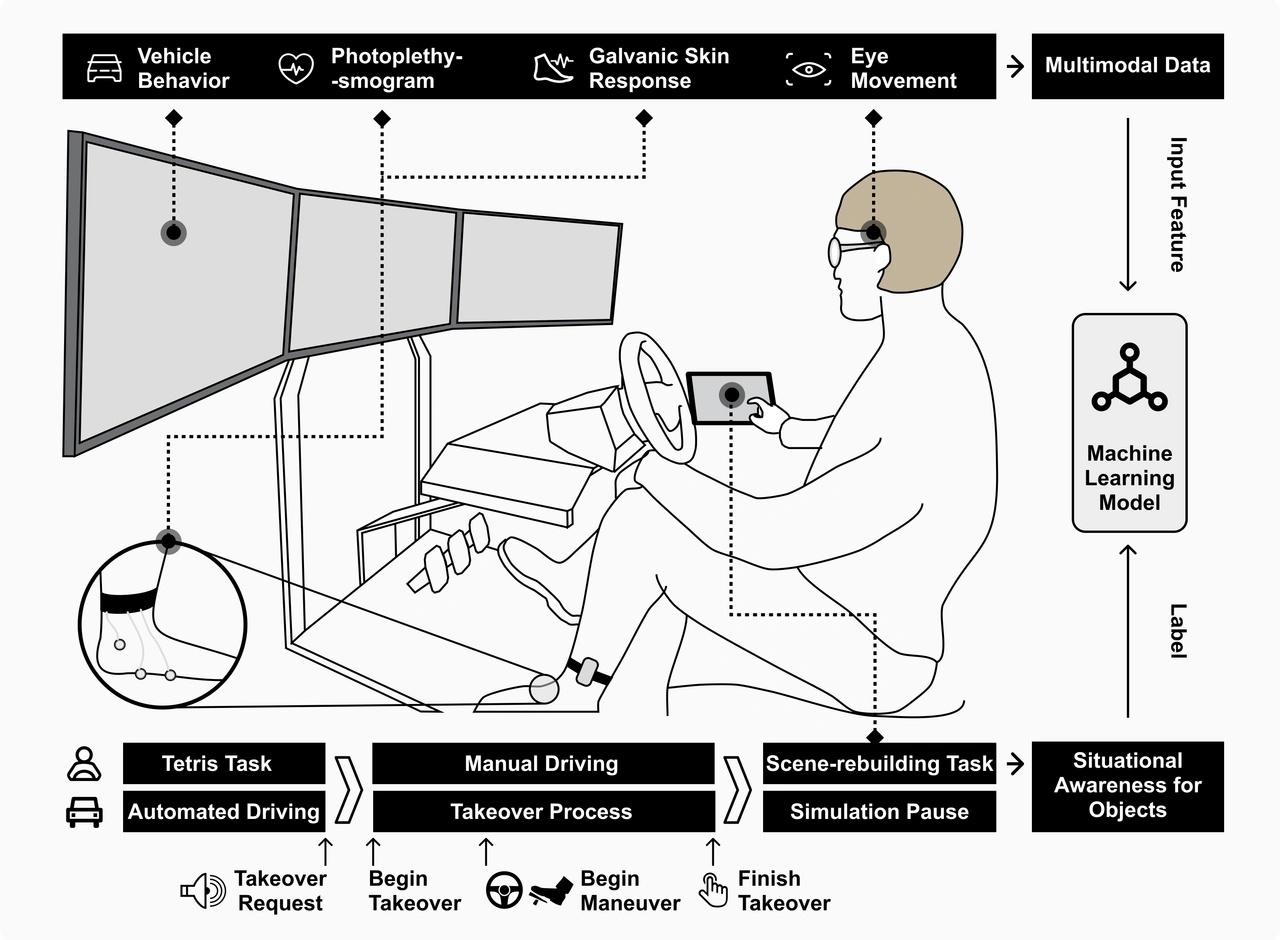

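Situation awareness refers to an individual's ability to perceive and comprehend key information in a dynamic environment and to project its future state. In application scenarios with high cognitive load and high information density, such as automated driving takeovers and UAV reconnaissance, whether users have accurate situation awareness often directly affects decision quality and task safety. Identifying and improving users' situation awareness is therefore a key problem in intelligent system design. Our research focuses on two directions: predicting situation awareness states and improving situation awareness through interface design.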

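First, we built a comprehensive framework for situation awareness prediction that systematically integrates several classes of influencing factors: user ability, user state, user behavior, and the scene situation. Within this framework, we combine individual-difference features such as age, gender, and driving experience, and physiological and behavioral signals such as eye movement, electrocardiogram (ECG), and electrodermal activity (EDA), with scene features such as the size and color of key targets in the environment to build a situation awareness state prediction model. The model helps the system identify situational information that users may have missed or misjudged and then generate targeted prompts and assistive information, supporting more timely and reliable decisions.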
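The feature-fusion idea in this framework can be sketched in a few lines of Python. The example below concatenates the four factor groups into one vector and fits a plain logistic regression by gradient descent. The feature names, the binary label (1 if the user noticed a key target, 0 if not), and the choice of logistic regression are all assumptions made for illustration; the models used in the publications listed below may differ. Features are assumed to be scaled to a comparable range beforehand.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical feature layout following the four factor groups in the text.
FEATURE_ORDER = [
    ("ability", ["age", "driving_years"]),
    ("state", ["heart_rate", "skin_conductance"]),
    ("behavior", ["gaze_dwell_on_target"]),
    ("scene", ["target_size", "target_contrast"]),
]


def fuse(sample: Dict[str, Dict[str, float]]) -> List[float]:
    """Concatenate all feature groups into one flat vector in a fixed order."""
    return [sample[group][name] for group, names in FEATURE_ORDER for name in names]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """Estimated probability that the user is aware of the target."""
    return sigmoid(bias + sum(w * v for w, v in zip(weights, x)))


def train(X: Sequence[Sequence[float]], y: Sequence[int],
          lr: float = 0.5, epochs: int = 300) -> Tuple[List[float], float]:
    """Fit logistic regression with batch gradient descent on pre-scaled features."""
    n = len(X)
    weights = [0.0] * len(X[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, label in zip(X, y):
            err = predict_proba(weights, bias, x) - label
            grad_b += err
            for j, v in enumerate(x):
                grad_w[j] += err * v
        bias -= lr * grad_b / n
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
    return weights, bias
```

A low predicted probability for a given target could then be used to trigger a prompt about that target, which is the role the text describes for the prediction model.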

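Second, we study how far different types of user interface help users grasp situational information more effectively. This work covers several dimensions, including the content presented in the interface, the presentation modality (visual, auditory, or multimodal), and the way information is organized and expressed. Through user experiments and statistical analysis, we aim to identify interface design patterns for different task scenarios and to propose generalizable design recommendations for situation awareness support interfaces, in order to improve comprehension efficiency, reduce cognitive load, and enhance task safety and the overall interaction experience.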

Related Publications

Lesong Jia, Kiran Shridhar Alva, Huao Li, Werner Hager, Lucia Romero, Timothy Albert Butler, and Michael Lewis. Understanding Situation Awareness in Multi-UAV Supervision: Effects of Environmental Complexity, Interface Design, and Human–AI Differences. International Journal of Human–Computer Interaction, 2026.

Lesong Jia, Na Du. Modeling Driver Situational Awareness in Takeover Scenarios Using Multimodal Data and Machine Learning. International Journal of Human–Computer Interaction, 2025.

Lesong Jia, Na Du. Driver Situational Awareness Prediction During Takeover Transitions: A Multimodal Machine Learning Approach. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2024.

Lesong Jia, Anfeng Peng, Huao Li, Xuehang Guo, Michael Lewis. Situation Theory Based Query Generation for Determining Situation Awareness Across Distributed Data Streams. 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS), 2024.

Lesong Jia, Chenglue Huang, Na Du. Drivers' situational awareness of surrounding vehicles during takeovers: Evidence from a driving simulator study. Transportation Research Part F: Traffic Psychology and Behaviour, 2024.

Lesong Jia, Na Du. Effects of traffic conditions on driver takeover performance and situational awareness. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2023.

Yaohan Ding, Lesong Jia, Na Du. Watch Out for Explanations: Information Type and Error Type Affect Trust and Situational Awareness in Automated Vehicles. IEEE Transactions on Human-Machine Systems, 2025.

Yaohan Ding, Lesong Jia, Na Du. One size does not fit all: Designing and evaluating criticality-adaptive displays in highly automated vehicles. Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, 2024.

Yaohan Ding, Lesong Jia, Na Du. Designing for trust and situational awareness in automated vehicles: effects of information type and error type. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2023.

Xiaoxi Du, Lesong Jia, Xiaozhou Zhou, Xinyue Miao, Weiye Xiao, Chengqi Xue. Trackable and Personalized Shortcut Menu Supporting Multi-user Collaboration. International Conference on Human-Computer Interaction, 2022.

Xuehang Guo, Anfeng Peng, Lesong Jia, Michael Lewis. Target Conspicuity for Human-UAV Visual Perception. 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS), 2024.

Xuehang Guo, Huao Li, Anfeng Peng, Lesong Jia, Brandon Rishi, Michael Lewis. Determining Human Perceptual Envelope in Fixed Wing UAV Surveillance. 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS), 2024.

Xinyue Miao, Chengqi Xue, Chuan Guo, Xiaozhou Zhou, Lichun Yang, Lesong Jia. Method and realization of multiplayer collaborative control oriented to the consultation platform. Proceedings of the 2021 5th International Conference on Electronic Information Technology and Computer Engineering, 2021.

Virtual and Augmented Reality

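Our research on virtual and augmented reality interaction aims to give users a more comfortable and efficient immersive interaction environment. It focuses on interface layout optimization, the size and distribution of virtual buttons, and the operating performance and usability of different interaction methods. Through systematic user experiments and interaction data analysis, we evaluate users' behavioral characteristics and efficiency when they perform basic tasks such as selection and clicking in virtual environments, and we propose quantitative evaluation methods for virtual interaction tasks. These results provide a verifiable design basis and optimization directions for VR/AR interface controls.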

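Beyond this, our research emphasizes understanding the interaction process and changes in cognitive state from users' multimodal behavioral data, and building adaptive user interfaces based on this dynamic information. Combining multimodal signals such as eye movement and hand motion, we analyze how bare-hand clicking and traditional control methods affect users' visual search strategies, attention allocation patterns, and operating load, and we explore modeling methods that predict users' attention states from multimodal features. This work lays the foundation for real-time user state recognition and adaptive interaction support in immersive systems, moving VR/AR interaction design from static interface optimization toward intelligent, personalized, and dynamically adjusting interfaces.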

Related Publications

Xiaoxi Du, Lesong Jia, Jinchun Wu, Ningyue Peng, Xiaozhou Zhou, Chengqi Xue. How bare-hand clicking influences eye movement behavior in virtual button selection tasks: a comparative analysis with keyboard control. Journal on Multimodal User Interfaces, 2025.

Xiaoxi Du, Jinchun Wu, Xinyi Tang, Xiaolei Lv, Lesong Jia, Chengqi Xue. Predicting User Attention States from Multimodal Eye–Hand Data in VR Selection Tasks. Electronics, 2025.

Xiaozhou Zhou, Yibing Guo, Lesong Jia, Yu Jin, Helu Li, Chengqi Xue. A study of button size for virtual hand interaction in virtual environments based on clicking performance. Multimedia Tools and Applications, 2023.

Jiarui Li, Xiaozhou Zhou, Lesong Jia, Weiye Xiao, Yu Jin, Chengqi Xue. A modeling method to evaluate the finger-click interactive task in the virtual environment. International Conference on Intelligent Human Systems Integration, 2021.

Xiaozhou Zhou, Yu Jin, Lesong Jia, Chengqi Xue. Study on hand–eye cordination area with bare-hand click interaction in virtual reality. Applied Sciences, 2021.

Xiaozhou Zhou, Hao Qin, Weiye Xiao, Lesong Jia, Chengqi Xue. A comparative usability study of bare hand three-dimensional object selection techniques in virtual environment. Symmetry, 2020.