Research Directions


Multimodal Interaction

Our multimodal interaction research integrates multiple input channels, such as gestures, speech, and posture, to create more natural and efficient forms of human-computer interaction. This work covers two main directions: scripted multimodal interaction design and natural multimodal behavior understanding.

Scripted multimodal interaction refers to interaction schemes predefined by developers, for example combining a specific gesture with a voice command to select a target and execute a task. In such interactions, users must follow established rules and use designated multimodal inputs to convey their intentions to the system. Our research in this direction examines users' multimodal usage patterns and preferences across scenarios and tasks, and analyzes how users understand the semantics of each modality and the encoding space each modality offers. From these findings, we design scripted multimodal interaction schemes that are interpretable, learnable, and generalizable across interaction functions.
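As a minimal illustration of what a scripted scheme can look like, the sketch below pairs a recognized gesture with a spoken command that arrives within a fixed time window and looks the pair up in a rule table. The gesture and command vocabulary, the `SCRIPT` table, the `fuse` function, and the 1.5-second window are all hypothetical assumptions, not a scheme taken from our publications.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class InputEvent:
    modality: str  # "gesture" or "speech"
    token: str     # recognized symbol, e.g. "point" or "select"
    t: float       # timestamp in seconds

# Hypothetical script: (gesture, spoken command) -> system action
SCRIPT = {
    ("point", "select"): "select_target",
    ("point", "delete"): "delete_target",
    ("pinch", "zoom"): "zoom_target",
}

FUSION_WINDOW_S = 1.5  # assumed maximum gap between gesture and command

def fuse(events: list[InputEvent]) -> list[str]:
    """Pair each spoken command with the most recent unconsumed gesture
    inside the fusion window and return the scripted actions, in order."""
    actions: list[str] = []
    last_gesture: Optional[InputEvent] = None
    for ev in sorted(events, key=lambda e: e.t):
        if ev.modality == "gesture":
            last_gesture = ev
        elif ev.modality == "speech" and last_gesture is not None:
            if ev.t - last_gesture.t <= FUSION_WINDOW_S:
                action = SCRIPT.get((last_gesture.token, ev.token))
                if action is not None:
                    actions.append(action)
                    last_gesture = None  # a gesture drives at most one action
    return actions
```

For example, with a `point` gesture at 0.0 s, `select` spoken at 0.8 s, a `pinch` at 3.0 s, and `zoom` spoken at 5.0 s, only `select_target` is produced: the zoom command arrives 2 seconds after the pinch, outside the assumed window. Rules of this kind are what make a scripted scheme learnable, since users can be told exactly which combinations the system accepts.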

Natural multimodal behavior understanding, by contrast, lets users interact with systems through multimodal behaviors in a more free-form manner. Compared with scripted approaches, it better matches user intuition, keeps the interaction natural and fluid, and requires little additional learning. However, it places higher demands on multimodal behavior recognition and intent inference algorithms. To meet these demands, we begin by collecting and analyzing users' natural multimodal behaviors, then combine traditional machine learning methods with large language models to build interaction models that can understand natural multimodal expressions, supporting more robust and generalizable human-computer interaction systems.

Related Publications

Lesong Jia, Xiaozhou Zhou, Chengqi Xue. Non-trajectory-based gesture recognition in human-computer interaction based on hand skeleton data. Multimedia Tools and Applications, 2022.

Lesong Jia, Xiaozhou Zhou, Hao Qin, Ruidong Bai, Liuqing Wang, Chengqi Xue. Research on discrete semantics in continuous hand joint movement based on perception and expression. Sensors, 2021.

Xiaozhou Zhou, Lesong Jia, Ruidong Bai, Chengqi Xue. DigCode—a generic mid-air gesture coding method on human-computer interaction. International Journal of Human-Computer Studies, 2024.

Xiaoxi Du, Lesong Jia, Weiye Xiao, Xiaozhou Zhou, Mu Tong, Jinchun Wu, Chengqi Xue. Speech+Posture: A Method for Interaction with Multiple and Large Interactive Displays. Intelligent Human Systems Integration (IHSI 2022), 2022.

Hao Ming, Xiaozhou Zhou, Chengqi Xue, Weiye Xiao, Lesong Jia. The Influence of the Threshold of the Size of the Graphic Element on the General Dynamic Gesture Behavior. International Conference on Human Systems Engineering and Design, 2019.

Robot Interaction

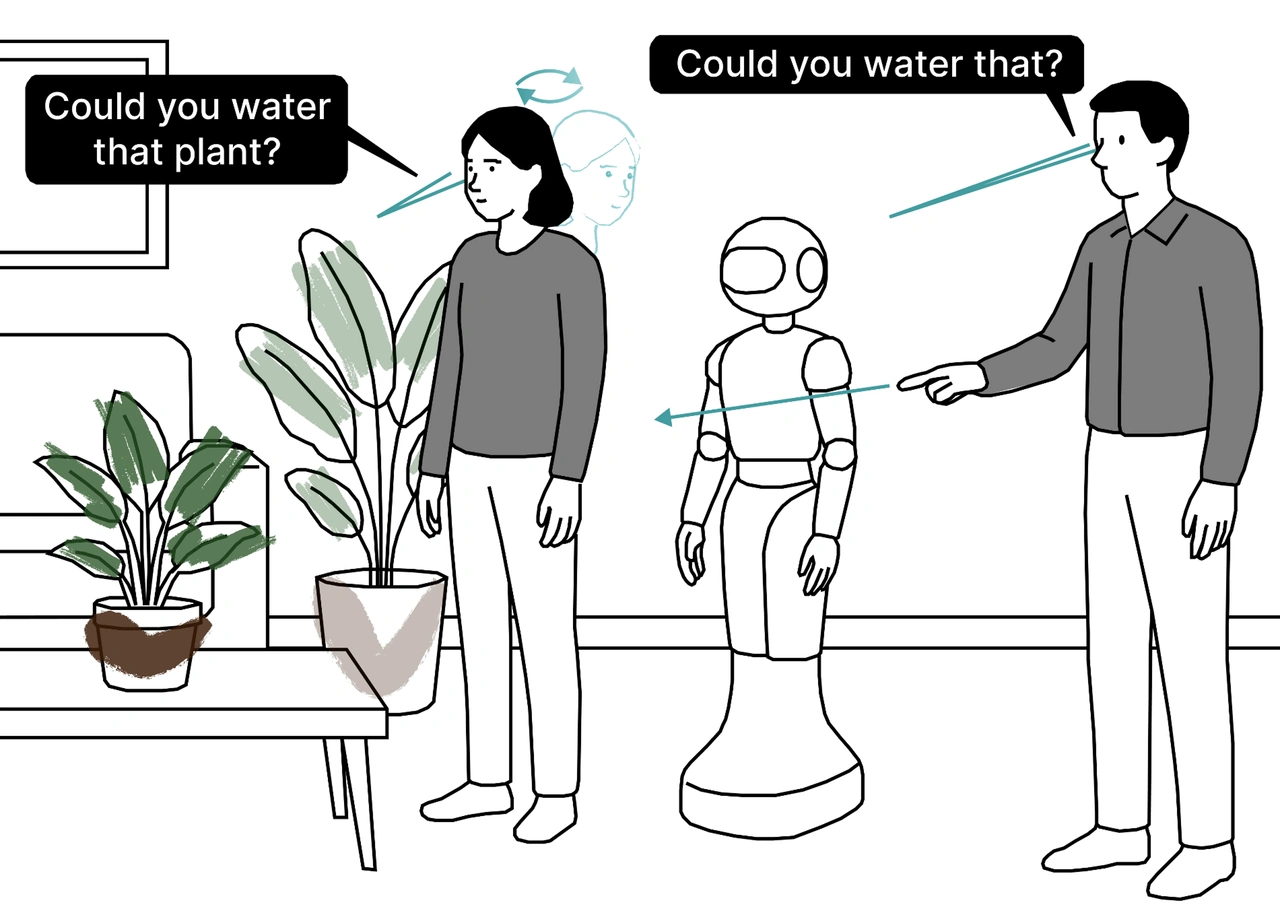

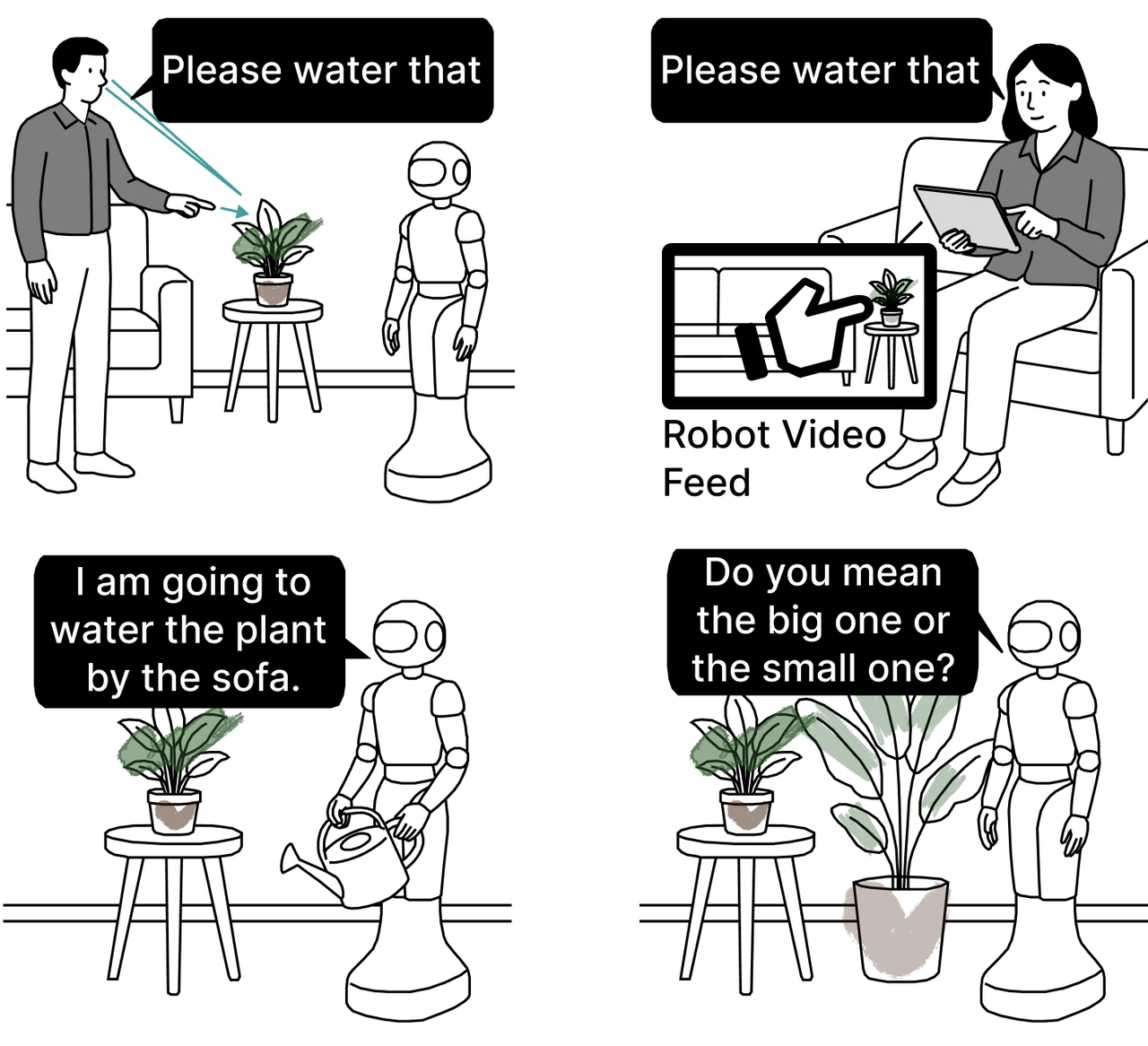

Our robot interaction research focuses on user-centered communication mechanisms, aiming to enhance the usability, comprehensibility, and trustworthiness of robotic systems through appropriate interaction and feedback modalities, making them better aligned with user needs and usage patterns.

Specifically, we explore users' core expectations for a robot's functional capabilities and for how its interface presents information when they collaborate with robots to complete tasks, and we identify the main difficulties and decision-making burdens users encounter during interaction. Through user studies, we analyze design considerations for robotic systems in task planning, execution feedback, operational transparency, and information presentation. We further investigate how preferences differ across feedback modalities (such as visual and auditory) in robot control and interaction, and how these modalities affect interaction efficiency, workload, and user experience, thereby providing empirical evidence and design recommendations for more natural and efficient human-robot collaboration interfaces.

In addition, we treat emotion as an important dimension of human-robot interaction quality and systematically study how robot emotional expression and emotion regulation strategies influence users' feelings and behaviors. We focus on how different emotion regulation approaches (such as empathetic versus non-empathetic regulation) affect users' emotional changes, trust formation, willingness to adopt robot advice, and overall evaluations of the interaction across contexts. Using experimental paradigms such as video-based and game simulation tasks, we build analytical frameworks that provide theoretical and experimental support for robot interaction strategies with greater social intelligence and emotional adaptability.

Related Publications

Lesong Jia, Jackie Ayoub, Danyang Tian, Miao Song, Ehsan Moradi Pari, Na Du. Robots with Heart: How Emotion Regulation Shapes Human-Robot Interaction. International Journal of Social Robotics, 2026.

Lesong Jia, Breelyn Kane Styler, Na Du. More Than Automation: User Insights into the Functionality and Interface of Wheelchair-Mounted Robotic Arms. 2025 20th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2025.

Lesong Jia, Jackie Ayoub, Danyang Tian, Miao Song, Ehsan Moradi Pari, Na Du. Emotion Regulation in Human-Robot Interaction: Insights from Video and Game Simulations. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2025.

Breelyn Kane Styler, Lesong Jia, Henny Admoni, Reid Simmons, Rory Cooper, Na Du, Dan Ding. Evaluating Feedback Modality Preferences of Power Wheelchair Users During Manual Robotic Arm Control. 2025 International Conference On Rehabilitation Robotics (ICORR), 2025.

Situation Awareness

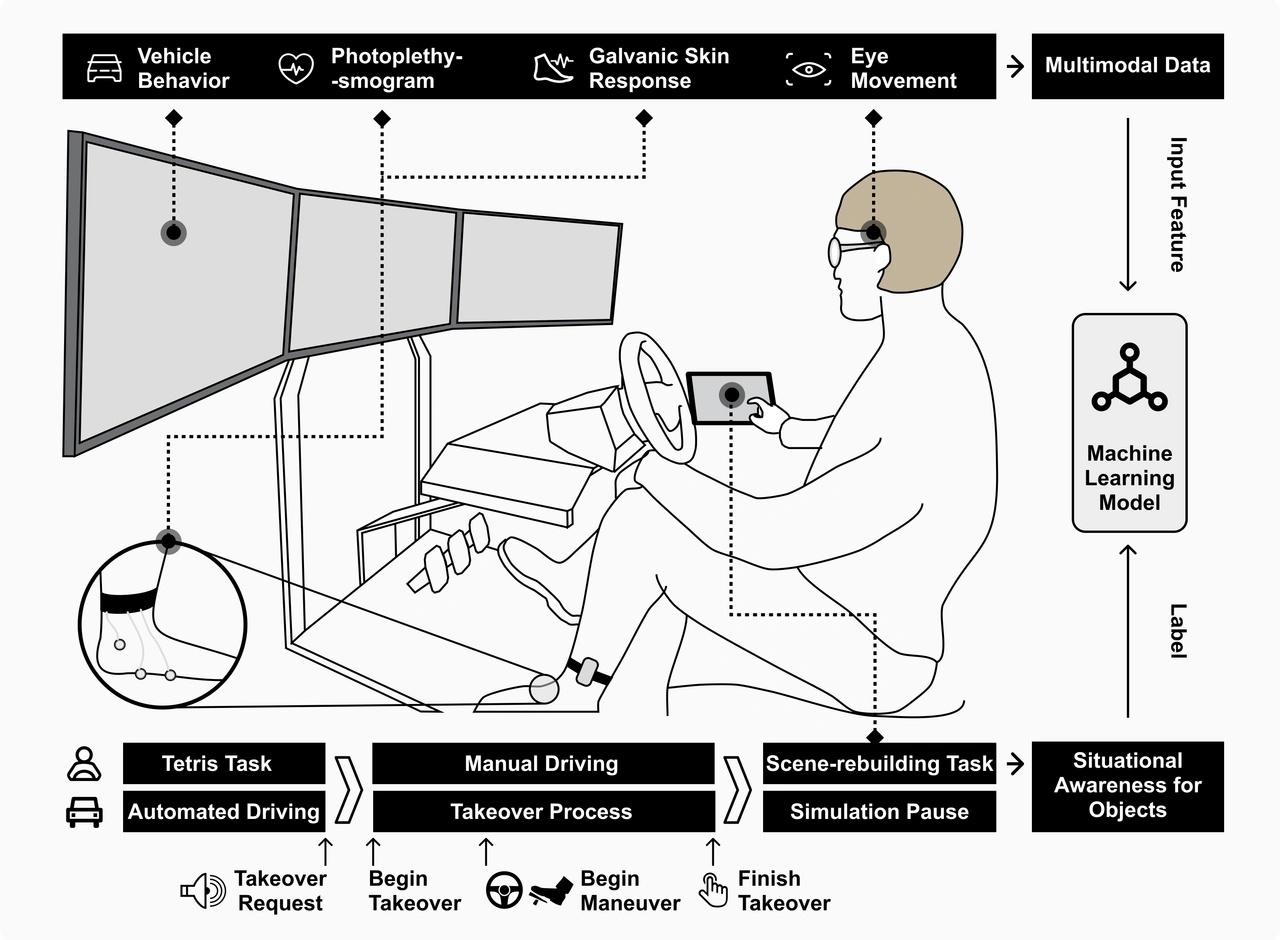

Situation awareness refers to an individual's ability to perceive and understand critical information in a dynamic environment and to predict its future states. In scenarios with high cognitive load and dense information, such as takeovers in automated driving and UAV reconnaissance, the accuracy of users' situation awareness directly affects decision quality and task safety. Identifying and enhancing users' situation awareness has therefore become a critical issue in intelligent system design. Our research focuses on two directions: predicting situation awareness states and enhancing situation awareness through interface design.

First, we have constructed a comprehensive framework for situation awareness prediction that systematically integrates multiple influencing factors including user capabilities, user states, user behaviors, and situational contexts. Under this framework, we combine individual difference characteristics such as user age, gender, and driving experience, along with physiological and behavioral signals such as eye movements, electrocardiography, and skin conductance, with environmental features such as the size and color of critical targets in the scene, to build situation awareness state prediction models. These models can help systems identify situation information that users may miss or misinterpret, and further generate targeted prompts and assistance information, thereby supporting more timely and reliable decision-making.
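The sketch below shows one simple way such a model could be assembled: features from the groups named above (individual differences, physiological and behavioral signals, and properties of the critical target) are concatenated into a single vector, standardized, and fed to a logistic regression that outputs the probability that the driver has perceived the target. The specific features, the `Trial` fields, and the choice of a from-scratch logistic regression are illustrative assumptions; our published models may use different features and learning algorithms.

```python
import math
from dataclasses import dataclass

@dataclass
class Trial:
    """One takeover trial; every field is a hypothetical example feature."""
    # Individual differences
    age: float
    driving_years: float
    is_female: int
    # Physiological and behavioral signals
    fixations_on_target: float
    heart_rate_bpm: float
    skin_conductance_us: float
    # Environmental features of the critical target
    target_size_deg: float
    color_contrast: float

def to_features(t: Trial) -> list[float]:
    """Concatenate the three feature groups into one vector (early fusion)."""
    return [t.age, t.driving_years, float(t.is_female),
            t.fixations_on_target, t.heart_rate_bpm, t.skin_conductance_us,
            t.target_size_deg, t.color_contrast]

def _sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit(trials: list[Trial], perceived: list[int], lr: float = 0.1, epochs: int = 500) -> dict:
    """Train a logistic regression by batch gradient descent on z-scored features."""
    X = [to_features(t) for t in trials]
    cols = list(zip(*X))
    means = [sum(c) / len(c) for c in cols]
    # Constant columns get a standard deviation of 1 to avoid division by zero
    stds = [math.sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    Z = [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in X]
    w, b, n = [0.0] * len(means), 0.0, len(Z)
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for z, y in zip(Z, perceived):
            err = _sigmoid(sum(wj * zj for wj, zj in zip(w, z)) + b) - y
            gw = [g + err * zj for g, zj in zip(gw, z)]
            gb += err
        w = [wj - lr * g / n for wj, g in zip(w, gw)]
        b -= lr * gb / n
    return {"w": w, "b": b, "means": means, "stds": stds}

def predict_proba(model: dict, t: Trial) -> float:
    """Predicted probability that the critical target was perceived."""
    z = [(v - m) / s for v, m, s in zip(to_features(t), model["means"], model["stds"])]
    return _sigmoid(sum(wj * zj for wj, zj in zip(model["w"], z)) + model["b"])
```

Concatenating the groups (early fusion) keeps the sketch short. A system built on such a model could flag trials with a low predicted probability as candidates for a targeted prompt about the missed target.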

We also investigate how well different types of user interfaces help users grasp situational information. This work covers several dimensions, including what information the interface presents, the presentation modality (visual, auditory, or multimodal), and how the information is organized and expressed. Through user experiments and statistical analysis, we summarize interface design patterns across task scenarios and propose generalizable design recommendations for situation awareness assistance interfaces, with the goals of improving comprehension efficiency, reducing cognitive load, and enhancing task safety and the overall interaction experience.

Related Publications

Lesong Jia, Kiran Shridhar Alva, Huao Li, Werner Hager, Lucia Romero, Timothy Albert Butler, Michael Lewis. Understanding Situation Awareness in Multi-UAV Supervision: Effects of Environmental Complexity, Interface Design, and Human–AI Differences. International Journal of Human–Computer Interaction, 2026.

Lesong Jia, Na Du. Modeling Driver Situational Awareness in Takeover Scenarios Using Multimodal Data and Machine Learning. International Journal of Human–Computer Interaction, 2025.

Lesong Jia, Na Du. Driver Situational Awareness Prediction During Takeover Transitions: A Multimodal Machine Learning Approach. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2024.

Lesong Jia, Anfeng Peng, Huao Li, Xuehang Guo, Michael Lewis. Situation Theory Based Query Generation for Determining Situation Awareness Across Distributed Data Streams. 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS), 2024.

Lesong Jia, Chenglue Huang, Na Du. Drivers' situational awareness of surrounding vehicles during takeovers: Evidence from a driving simulator study. Transportation Research Part F: Traffic Psychology and Behaviour, 2024.

Lesong Jia, Na Du. Effects of traffic conditions on driver takeover performance and situational awareness. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2023.

Yaohan Ding, Lesong Jia, Na Du. Watch Out for Explanations: Information Type and Error Type Affect Trust and Situational Awareness in Automated Vehicles. IEEE Transactions on Human-Machine Systems, 2025.

Yaohan Ding, Lesong Jia, Na Du. One size does not fit all: Designing and evaluating criticality-adaptive displays in highly automated vehicles. Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, 2024.

Yaohan Ding, Lesong Jia, Na Du. Designing for trust and situational awareness in automated vehicles: effects of information type and error type. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 2023.

Xiaoxi Du, Lesong Jia, Xiaozhou Zhou, Xinyue Miao, Weiye Xiao, Chengqi Xue. Trackable and Personalized Shortcut Menu Supporting Multi-user Collaboration. International Conference on Human-Computer Interaction, 2022.

Xuehang Guo, Anfeng Peng, Lesong Jia, Michael Lewis. Target Conspicuity for Human-UAV Visual Perception. 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS), 2024.

Xuehang Guo, Huao Li, Anfeng Peng, Lesong Jia, Brandon Rishi, Michael Lewis. Determining Human Perceptual Envelope in Fixed Wing UAV Surveillance. 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS), 2024.

Xinyue Miao, Chengqi Xue, Chuan Guo, Xiaozhou Zhou, Lichun Yang, Lesong Jia. Method and realization of multiplayer collaborative control oriented to the consultation platform. Proceedings of the 2021 5th International Conference on Electronic Information Technology and Computer Engineering, 2021.

Virtual and Augmented Reality

Our research in virtual and augmented reality interaction focuses on creating more comfortable and efficient immersive interaction environments for users, with particular emphasis on interface layout optimization, virtual button size and distribution design, and operational performance and usability across different interaction modalities. Through systematic user experiments and interaction data analysis, we evaluate users' behavioral characteristics and operational efficiency when completing fundamental tasks such as selection and clicking in virtual environments, proposing quantitative evaluation methods for virtual interaction tasks and providing verifiable design foundations and optimization directions for VR/AR interface control design.
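One widely used way to quantify pointing and clicking performance is Fitts' law, shown here as an illustration rather than as the specific evaluation method of the publications below. It models movement time as growing linearly with the index of difficulty ID = log2(D / W + 1), where D is the distance to a button and W is its width. The sketch computes ID and throughput and fits MT = a + b * ID by ordinary least squares; the function names are hypothetical.

```python
import math

def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts' index of difficulty, in bits."""
    return math.log2(distance / width + 1.0)

def throughput(distance: float, width: float, movement_time_s: float) -> float:
    """Throughput in bits per second for a single distance/width condition."""
    return index_of_difficulty(distance, width) / movement_time_s

def fit_fitts(ids: list[float], times_s: list[float]) -> tuple[float, float]:
    """Ordinary least-squares fit of MT = a + b * ID; returns (a, b)."""
    n = len(ids)
    mx, my = sum(ids) / n, sum(times_s) / n
    sxx = sum((x - mx) ** 2 for x in ids)
    sxy = sum((x - mx) * (y - my) for x, y in zip(ids, times_s))
    b = sxy / sxx
    return my - b * mx, b

def predicted_time(a: float, b: float, distance: float, width: float) -> float:
    """Predicted movement time for a button of the given width at the given distance."""
    return a + b * index_of_difficulty(distance, width)
```

Given per-condition mean click times from an experiment, `fit_fitts` yields the intercept and slope, and `predicted_time` can then compare candidate button sizes at a fixed distance. Bare-hand clicking in VR also involves depth and tracking error, which a one-dimensional model of this kind may not capture, so such a fit is best treated as a baseline.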

Our research also seeks to understand interaction processes and changes in cognitive state from users' multimodal behavioral data, and to build adaptive user interfaces based on this dynamic information. Combining multimodal signals such as eye movements and hand gestures, we analyze how bare-hand clicking and traditional control methods (such as keyboard control) affect users' visual search strategies, attention allocation patterns, and operational load. We also explore modeling methods that use multimodal features to predict users' attention states. This work lays the foundation for real-time user state recognition and adaptive interaction support in immersive systems, moving VR/AR interaction design from static interface optimization toward intelligent, personalized, and dynamic adaptation.
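As a rough sketch of attention-state prediction from eye-hand data, the code below summarizes a window of synchronized gaze and hand samples into four features (gaze dwell ratio on the target, mean gaze speed, mean hand speed, and mean gaze-hand distance) and classifies the window with a nearest-centroid rule on standardized features. The feature set, the shared 2D coordinate frame, the state labels, and the classifier are illustrative assumptions; the cited study may define attention states and models differently.

```python
import math
from statistics import mean, pstdev

Sample = tuple[float, float, float]  # (t in seconds, x, y) in a shared 2D frame

def _mean_speed(samples: list[Sample]) -> float:
    """Mean point-to-point speed over consecutive samples."""
    speeds = []
    for (t0, x0, y0), (t1, x1, y1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt > 0:
            speeds.append(math.hypot(x1 - x0, y1 - y0) / dt)
    return mean(speeds) if speeds else 0.0

def window_features(gaze: list[Sample], hand: list[Sample],
                    target: tuple[float, float, float]) -> list[float]:
    """Features for one window; gaze and hand are assumed time-aligned."""
    tx, ty, radius = target
    dwell = sum(math.hypot(x - tx, y - ty) <= radius for _, x, y in gaze) / len(gaze)
    gaze_hand = mean(math.hypot(gx - hx, gy - hy)
                     for (_, gx, gy), (_, hx, hy) in zip(gaze, hand))
    return [dwell, _mean_speed(gaze), _mean_speed(hand), gaze_hand]

class NearestCentroid:
    """Assigns a window to the state whose standardized centroid is closest."""

    def fit(self, X: list[list[float]], y: list[str]) -> "NearestCentroid":
        cols = list(zip(*X))
        self.mu = [mean(c) for c in cols]
        self.sd = [pstdev(c) or 1.0 for c in cols]  # guard constant features
        Z = [self._z(x) for x in X]
        self.centroids = {}
        for label in set(y):
            rows = [z for z, lab in zip(Z, y) if lab == label]
            self.centroids[label] = [mean(c) for c in zip(*rows)]
        return self

    def _z(self, x: list[float]) -> list[float]:
        return [(v - m) / s for v, m, s in zip(x, self.mu, self.sd)]

    def predict(self, x: list[float]) -> str:
        z = self._z(x)
        return min(self.centroids, key=lambda lab: math.dist(z, self.centroids[lab]))
```

A nearest-centroid rule keeps the example short; with labeled windows from real recordings, any classifier could take its place behind the same `window_features` step, and its output could drive the adaptive interface behavior described above.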

Related Publications

Xiaoxi Du, Lesong Jia, Jinchun Wu, Ningyue Peng, Xiaozhou Zhou, Chengqi Xue. How bare-hand clicking influences eye movement behavior in virtual button selection tasks: a comparative analysis with keyboard control. Journal on Multimodal User Interfaces, 2025.

Xiaoxi Du, Jinchun Wu, Xinyi Tang, Xiaolei Lv, Lesong Jia, Chengqi Xue. Predicting User Attention States from Multimodal Eye–Hand Data in VR Selection Tasks. Electronics, 2025.

Xiaozhou Zhou, Yibing Guo, Lesong Jia, Yu Jin, Helu Li, Chengqi Xue. A study of button size for virtual hand interaction in virtual environments based on clicking performance. Multimedia Tools and Applications, 2023.

Jiarui Li, Xiaozhou Zhou, Lesong Jia, Weiye Xiao, Yu Jin, Chengqi Xue. A modeling method to evaluate the finger-click interactive task in the virtual environment. International Conference on Intelligent Human Systems Integration, 2021.

Xiaozhou Zhou, Yu Jin, Lesong Jia, Chengqi Xue. Study on hand–eye cordination area with bare-hand click interaction in virtual reality. Applied Sciences, 2021.

Xiaozhou Zhou, Hao Qin, Weiye Xiao, Lesong Jia, Chengqi Xue. A comparative usability study of bare hand three-dimensional object selection techniques in virtual environment. Symmetry, 2020.